Naomi Lago

September 6, 2023

Recently, during the Exploratory Data Analysis (EDA) step of a project, I decided to plot a choropleth map showing the population distribution across Brazilian states. To do so, I first had to get the coordinates and then choose the libraries for the plot.

Since these are two separate processes, in this post I describe how I got the data using a Google API from the Google Maps Platform.

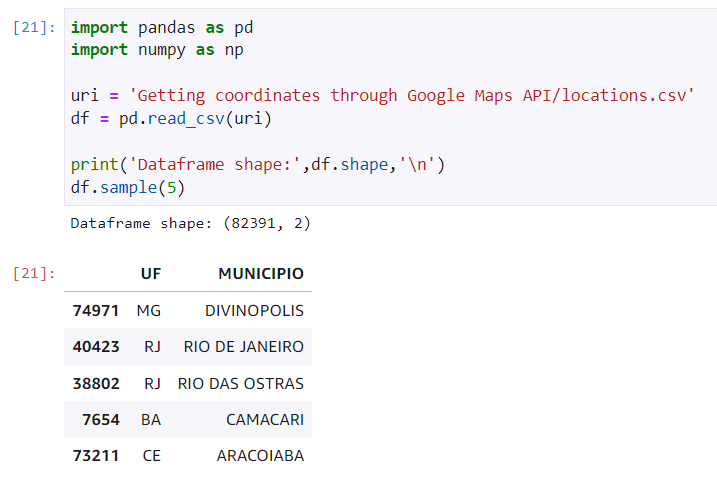

For this post, I’ll be using a generic dataset with two columns, UF and MUNICIPIO, which refer to state and city, respectively.

The shape is (82391, 2).

Sample with 5 entries

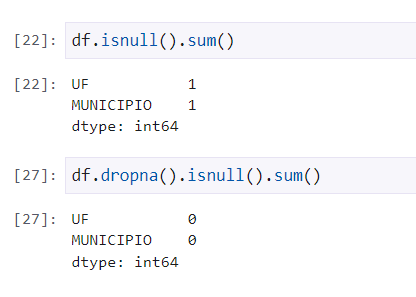

We don’t want the queries in our API requests to contain null values, so I’ll check how many values are missing and delete them if the amount is small.

Handling null values
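A minimal sketch of this check, assuming the data is loaded into a pandas dataframe called df with the UF and MUNICIPIO columns described above. The rows below are toy data for illustration, not the real dataset:

```python
import pandas as pd

# Toy stand-in for the real dataset (illustrative rows only).
df = pd.DataFrame(
    {
        "UF": ["SP", "RJ", None, "MG"],
        "MUNICIPIO": ["São Paulo", None, "Curitiba", "Belo Horizonte"],
    }
)

# Count the missing values per column before deciding what to do with them.
null_counts = df.isnull().sum()
print(null_counts)

# Drop every row with a missing state or city, then renumber the rows
# so the index stays contiguous for later steps.
df = df.dropna(subset=["UF", "MUNICIPIO"]).reset_index(drop=True)
print(df.shape)
```

Resetting the index is a choice made for this sketch: it keeps row positions contiguous, which matters if other columns are later attached by position.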

There was only one null value in each column, and both were removed. Now, let’s set up the API integration.

Before starting, make sure the googlemaps library, which handles the integration, is installed. I can do that by running `pip install -Uqq googlemaps` in my terminal (or `%pip install -Uqq googlemaps` in a notebook cell).

After configuring your cloud environment at Google, grab your API key. I recommend storing it in a .json file instead of inserting it directly into your code. Another option is to use environment variables on your machine. For this example, I stored it at /credentials/api_keys.json with the following format:

JSON format for storing the API key

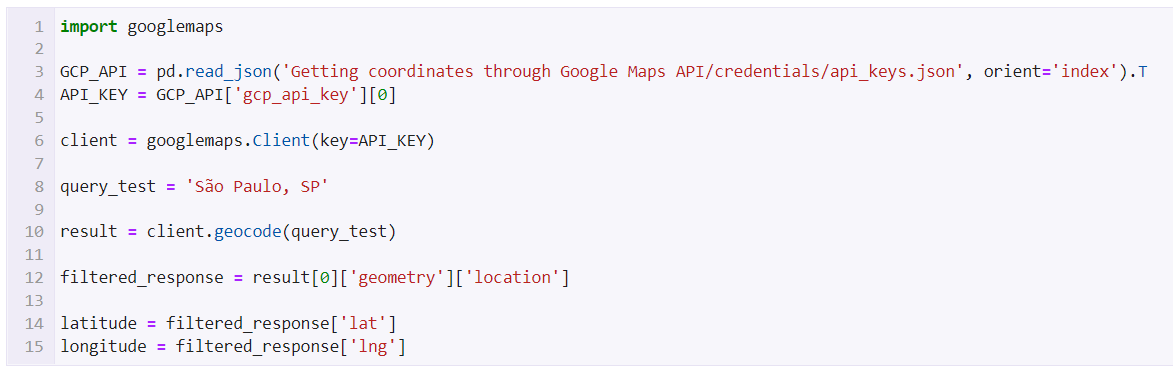
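Reading the key back could look like the sketch below. The JSON field name google_maps and the environment variable GOOGLE_MAPS_API_KEY are hypothetical names picked for illustration; adjust them to match your own file and machine:

```python
import json
import os


def load_api_key(path, field="google_maps", env_var="GOOGLE_MAPS_API_KEY"):
    """Return the API key from a JSON file, else from an environment variable.

    `field` and `env_var` are hypothetical names used only in this sketch.
    """
    try:
        with open(path, encoding="utf-8") as f:
            return json.load(f)[field]
    except (FileNotFoundError, KeyError):
        # Fall back to the environment when the file or the field is missing.
        key = os.environ.get(env_var)
        if key is None:
            raise RuntimeError(
                f"No API key found in {path} or in environment variable {env_var}"
            )
        return key
```

With this helper, `load_api_key("/credentials/api_keys.json")` returns the stored key without it ever appearing in the code itself.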

With that in place, we can make our first request. Initially, I’ll make a single, statically defined request for ‘São Paulo, SP’ and store the results in the latitude and longitude variables.

Sample request

In the first lines, after importing the googlemaps library, I read the file where the key is stored and authenticate through the client. I then define the query and wait for the response, storing it in a variable called result. To get the coordinates themselves, I keep only the latitude and longitude attributes of the response.
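The steps just described can be sketched as follows. This is a reconstruction under assumptions, not necessarily the exact code used: it relies on googlemaps.Client and its geocode method, and on the Geocoding API response being a list of results that each carry geometry → location → lat/lng. The helper names extract_lat_lng and sample_request are made up for this sketch:

```python
def extract_lat_lng(result):
    """Pull (lat, lng) out of a geocoding response, or (None, None) if empty."""
    if not result:
        return None, None
    location = result[0]["geometry"]["location"]
    return location["lat"], location["lng"]


def sample_request(api_key, query="São Paulo, SP"):
    """Authenticate, send one geocoding query and return its coordinates."""
    # Imported here so extract_lat_lng can be used without the package installed.
    import googlemaps

    gmaps = googlemaps.Client(key=api_key)
    result = gmaps.geocode(query)
    return extract_lat_lng(result)
```

Calling `latitude, longitude = sample_request(api_key)` with a valid key performs the real request; extract_lat_lng on its own can be tried against any saved response.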

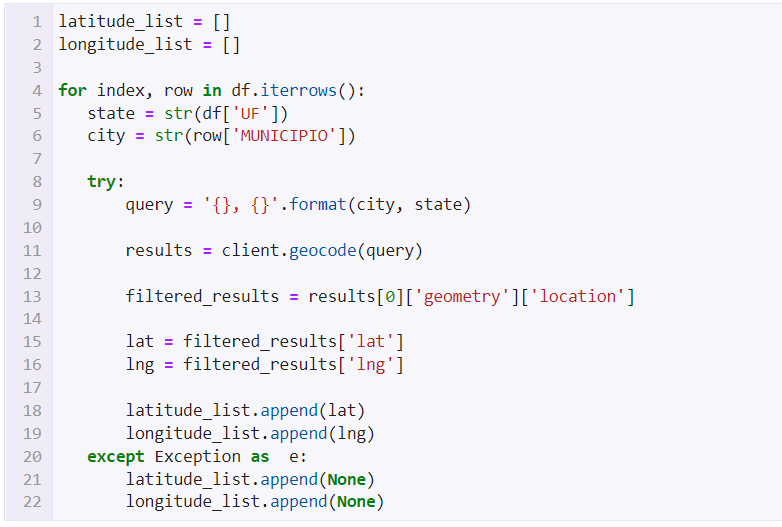

Now that the API is tested and integrated, which is the core of our task, we can go on to collect coordinates for all the entries of the dataset.

The idea now is to go through each row and use its city and state as the basis for the query. To do that, I defined two empty lists to store the latitude and longitude responses and put the same logic as before in a loop, which gets the city and state of each row, creates the query, makes the request and returns the data we need.

Running through every entry and storing the results

Note that I added a try/except so that, if a request for a specific row doesn’t find the coordinates, the lists are filled with a null value and the run continues instead of crashing.
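A sketch of that loop, under the same assumptions about the response shape as before. The function name geocode_rows is hypothetical, and client is expected to be an authenticated googlemaps.Client (or anything else exposing a geocode method):

```python
def geocode_rows(client, rows):
    """Geocode (city, state) pairs and return two parallel lists of coordinates."""
    latitudes, longitudes = [], []
    for city, state in rows:
        query = f"{city}, {state}"
        try:
            location = client.geocode(query)[0]["geometry"]["location"]
            lat, lng = location["lat"], location["lng"]
        except Exception:
            # No result (or a failed request): record a null and keep going.
            lat, lng = None, None
        # Append outside the try block so both lists always stay the same length.
        latitudes.append(lat)
        longitudes.append(lng)
    return latitudes, longitudes
```

With the dataframe from earlier, the rows could be supplied as `zip(df["MUNICIPIO"], df["UF"])`. Catching the broad Exception mirrors the "don't stop the run" intent; a stricter version might catch only lookup and API errors.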

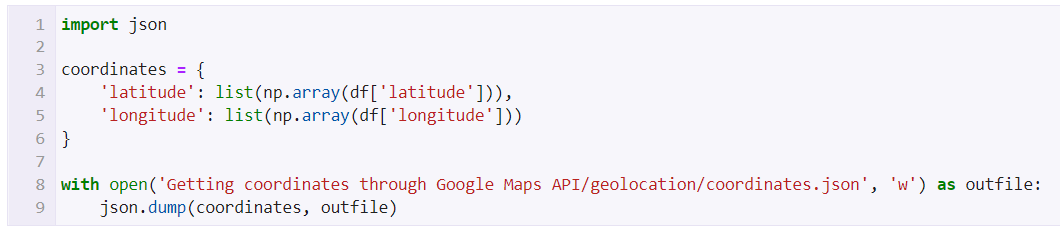

In the end, we’ll have the coordinates stored in the two lists we defined earlier. To prevent this data from being lost and to be able to use it elsewhere later, I’ll export the results to a .json file.

Saving the results

So, a dictionary was defined containing the two lists. We can now work with our data and consider this task done.
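The export step might look like this sketch. The dictionary keys latitude and longitude follow the column names used in this post; the function name save_coordinates and the output path are hypothetical:

```python
import json


def save_coordinates(latitudes, longitudes, path):
    """Write the two coordinate lists to a JSON file and return the dictionary."""
    coordinates = {"latitude": latitudes, "longitude": longitudes}
    with open(path, "w", encoding="utf-8") as f:
        # Python's None is written as JSON null, so failed lookups survive the trip.
        json.dump(coordinates, f)
    return coordinates
```

For example, `save_coordinates(latitudes, longitudes, "coordinates.json")` writes both lists to a single file that can be reloaded later without calling the API again.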

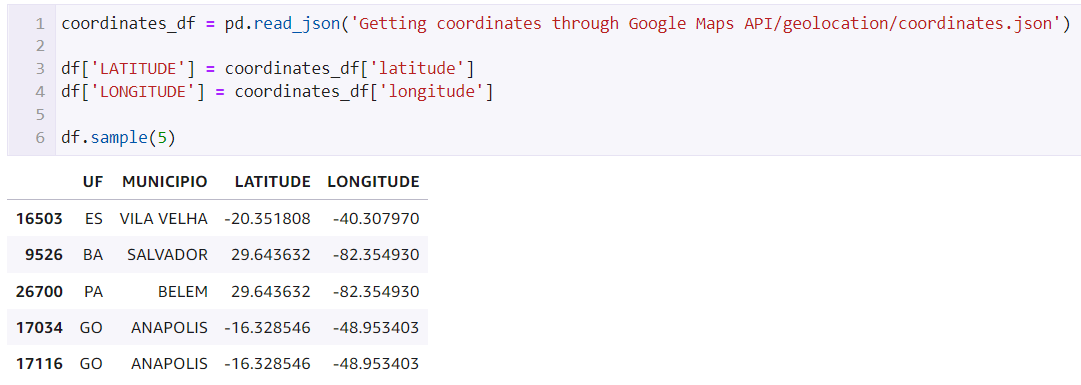

Now that we have a JSON file with the coordinates, we can import it as a dataframe and concatenate it with our main one:

Joining the results to the main dataframe
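One way to sketch this join, assuming the JSON file holds a dictionary with equally long latitude and longitude lists in the same row order as the main dataframe. The function name attach_coordinates is hypothetical:

```python
import json

import pandas as pd


def attach_coordinates(df, coordinates_path):
    """Append latitude/longitude columns from a JSON file to df, row by row."""
    with open(coordinates_path, encoding="utf-8") as f:
        coords = pd.DataFrame(json.load(f))
    if len(coords) != len(df):
        raise ValueError("coordinates and dataframe have different lengths")
    # concat(axis=1) aligns on the index, so both sides need the same 0..n-1 index.
    return pd.concat([df.reset_index(drop=True), coords], axis=1)
```

The length check and the index reset are safeguards added for this sketch: if rows were dropped earlier without renumbering, a column-wise concat would otherwise misalign rows and introduce spurious nulls.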

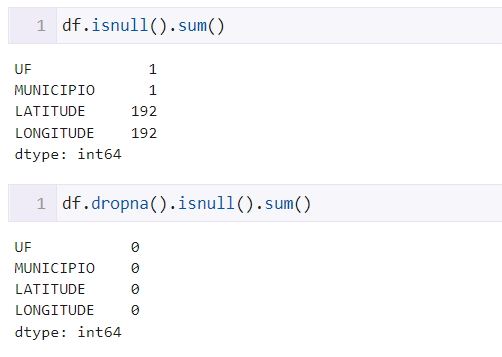

Now, let’s check whether there are any null values and remove them if so. These values appear when a request didn’t return any coordinates, so the code fell into the exception and filled the entry with None, as we saw in the try/except step.

Handling null values from the API

As we can see, there were 192 cases where the API didn’t return any result. They were removed, since the proportion is small compared to the dataframe size.

In the end, we were able to complete the task and now we have two new columns: latitude and longitude. We can use them for further geographic analyses and for plotting the coordinates on maps.
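The removal described above can be sketched as follows, again on toy rows rather than the real data. It reports the share of rows without coordinates before dropping them, which is the figure that justifies the removal:

```python
import pandas as pd

# Toy dataframe standing in for the joined result (illustrative values only).
df = pd.DataFrame(
    {
        "UF": ["SP", "XX", "MG"],
        "MUNICIPIO": ["São Paulo", "Nowhere", "Belo Horizonte"],
        "latitude": [-23.55, None, -19.92],
        "longitude": [-46.63, None, -43.94],
    }
)

# A row is unusable if either coordinate is missing.
missing = df["latitude"].isnull() | df["longitude"].isnull()
print(f"{missing.sum()} of {len(df)} rows ({missing.mean():.2%}) have no coordinates")

# Keep only the rows where the API returned a result.
df = df[~missing].reset_index(drop=True)
```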

Final dataframe with results

Python: A programming language that lets you work quickly and integrate systems effectively.

Pandas: A fast, powerful, flexible and easy-to-use open source tool for data analysis and data wrangling, built on top of Python.

NumPy: A Python library that provides a multidimensional array object, various derived objects and a variety of routines for array operations.

Google Maps Platform: A Cloud Computing platform by Google that offers mapping services, including geolocation APIs.

Thanks for reading, I’ll see you in the next one ⭐